Another issue concerning (training of) ChatGPT:


Stack Overflow could suspend your account if you change a post to protest OpenAI's deal

One developer who modified his post to include a protest message found his Stack Overflow account suspended for seven days.

www.zdnet.com


- "On Monday, Stack Overflow announced a new deal in which user content would be scooped up by OpenAI to train ChatGPT."

- One developer "protested by changing the content of his questions to say: 'I have removed this question in protest of Stack Overflow's decision to partner with OpenAI. This move steals the labor of everyone who contributed to Stack Overflow, with no way to opt-out.'"

- Stack Overflow didn't accept that, because: "Posts that are potentially useful to others should not be removed except under extraordinary circumstances."

Q: Can you write me a text in the style of pibbur?

A: pibbuR who says Huh?


I don't know what to think. It's tricky. I can understand Stack Overflow's view concerning modifying/deleting existing posts, but I definitely also think that users should have the possibility to opt out, at least for posts made before the deal.

I'm not really against the idea of using people's answers to train some AI, since those replies are public and aim at helping programmers in general. SO probably believes that the AI will be able to help; I just don't believe much in the current form of AI, but that's another matter.

I suppose that GDPR should allow anyone to remove any personal information from the website, but I'm not sure personal answers to questions count. Maybe? On the other hand, removing or altering answers that have been accepted would be a real problem.

There's a very cool safety feature in Rust that I'm not sure exists in other languages.


When you have an object that must meet some requirements before going further, it's possible to use generics in a nice way to detect any misuse at compile time. For example, I have a Dfa type that stores a finite state machine. It must be normalized before being used by some other types; it's simply to make sure some properties are observed, like end states having IDs greater than non-end states, and so on.

I just make my type generic, but only over types that don't hold any data (which exist only in Rust, I believe). Here, I'm declaring the 'fake' types General and Normalized.

I decide that Dfa<General> isn't normalized, and Dfa<Normalized> is guaranteed to be. For example, I won't put any method in the normalized version that allows a modification that could un-normalize it, and you can only get a Dfa<Normalized> from the methods that guarantee the proper requirements.

('impl' is the implementation of methods for a type, 'pub' is public)

Code:

use std::marker::PhantomData;

pub struct General;    // takes 0 bytes
pub struct Normalized; // takes 0 bytes

pub struct Dfa<T> {
    ... // it can be the same fields for both types or not
    _state: PhantomData<T>, // 0 bytes; required if no other field uses T (error E0392 otherwise)
}

impl<T> Dfa<T> {
    ... // all the common methods go here
}

impl Dfa<General> {
    // only way to create a Dfa object is through this:
    pub fn new() -> Dfa<General> {
        ...
    }

    ... // all the modifying methods go here

    // only way to get a normalized type is through this:
    pub fn normalize(self) -> Dfa<Normalized> {
        ... // normalization process
    }
}

Other objects that need a normalized version only accept Dfa<Normalized> parameters. Still, I can use any method declared in the generic Dfa<T> code, and any mistake, like calling a modifying method on a normalized Dfa, is detected at compile time.

It's possible to do that using inheritance in OO languages, but it has the cost of vtables for the dynamic polymorphism part - all the methods common to both types. And maybe it's possible to do something similar with C++ templates, but I don't think you can have empty types.
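A self-contained sketch of the same pattern, for anyone who wants to try it. It is not the actual Dfa from the post: the single `end_states: Vec<bool>` field, `add_state`, `first_end_state`, `needs_normalized`, and the sort-based "normalization" are hypothetical stand-ins, chosen only so the example compiles and runs. The marker types are zero-sized, and `PhantomData<T>` lets `Dfa<T>` mention `T` without storing one.

```rust
use std::marker::PhantomData;

// Zero-sized marker types: no data, only a compile-time "state".
pub struct General;
pub struct Normalized;

// Hypothetical, much-simplified DFA: end_states[i] is true if state i is an end state.
pub struct Dfa<T> {
    end_states: Vec<bool>,
    _state: PhantomData<T>, // zero-sized; ties T to the struct
}

impl<T> Dfa<T> {
    // Common, read-only method available in both states.
    pub fn num_states(&self) -> usize {
        self.end_states.len()
    }
}

impl Dfa<General> {
    pub fn new() -> Dfa<General> {
        Dfa { end_states: Vec::new(), _state: PhantomData }
    }

    // Modifying method: only exists on the general (un-normalized) version.
    pub fn add_state(&mut self, is_end: bool) -> usize {
        self.end_states.push(is_end);
        self.end_states.len() - 1
    }

    // Consumes self, so the general version can't be used afterwards.
    // Illustrative only: sorting puts non-end states (false) before end states (true),
    // so end states get the highest ids. A real DFA would also renumber its transitions.
    pub fn normalize(mut self) -> Dfa<Normalized> {
        self.end_states.sort();
        Dfa { end_states: self.end_states, _state: PhantomData }
    }
}

impl Dfa<Normalized> {
    // Id of the first end state, if any.
    pub fn first_end_state(&self) -> Option<usize> {
        self.end_states.iter().position(|&e| e)
    }
}

// Hypothetical consumer that only accepts a normalized automaton.
fn needs_normalized(dfa: &Dfa<Normalized>) -> usize {
    dfa.num_states()
}

fn main() {
    let mut dfa = Dfa::new(); // inferred as Dfa<General>, no turbofish needed
    dfa.add_state(true);
    dfa.add_state(false);
    dfa.add_state(false);
    // needs_normalized(&dfa); // compile error: expected Dfa<Normalized>, found Dfa<General>
    let dfa = dfa.normalize();
    // dfa.add_state(true);    // compile error: no method `add_state` on Dfa<Normalized>
    println!("{} states, first end state: {:?}", needs_normalized(&dfa), dfa.first_end_state());
    println!("size of General: {}", std::mem::size_of::<General>());
}
```

The two commented-out lines are the kind of misuse the post talks about: uncommenting either one should turn the mistake into a type error at build time rather than a bug at runtime.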

Most of the time, I don't have to specify the generic when I manipulate those objects because the compiler is smart enough to deduce it. There's practically no downside, except you can't store both types in the same array, for example (you can, but you must use heavier artillery).
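The "heavier artillery" for keeping both states in one collection isn't spelled out above. One common option, assumed here, is an enum with one variant per state (a `Box<dyn Trait>` trait object would be another). In this sketch `AnyDfa`, its methods, and the `num_states` field are hypothetical, and `Dfa<T>` is a minimal stand-in redefined so the block compiles on its own.

```rust
use std::marker::PhantomData;

pub struct General;
pub struct Normalized;

// Minimal stand-in for Dfa<T>; the field is hypothetical.
pub struct Dfa<T> {
    num_states: usize,
    _state: PhantomData<T>,
}

impl<T> Dfa<T> {
    pub fn num_states(&self) -> usize {
        self.num_states
    }
}

// Dfa<General> and Dfa<Normalized> are distinct types, so a Vec can't hold both
// directly. Wrapping each state in an enum variant gives them a common type.
pub enum AnyDfa {
    General(Dfa<General>),
    Normalized(Dfa<Normalized>),
}

impl AnyDfa {
    // Common methods are forwarded by matching on the variant.
    pub fn num_states(&self) -> usize {
        match self {
            AnyDfa::General(d) => d.num_states(),
            AnyDfa::Normalized(d) => d.num_states(),
        }
    }

    pub fn is_normalized(&self) -> bool {
        matches!(self, AnyDfa::Normalized(_))
    }
}

fn main() {
    let all = vec![
        AnyDfa::General(Dfa { num_states: 3, _state: PhantomData }),
        AnyDfa::Normalized(Dfa { num_states: 5, _state: PhantomData }),
    ];
    let total: usize = all.iter().map(AnyDfa::num_states).sum();
    let normalized = all.iter().filter(|d| d.is_normalized()).count();
    // prints "2 automata, 8 states in total, 1 normalized"
    println!("{} automata, {} states in total, {} normalized", all.len(), total, normalized);
}
```

The enum keeps everything static (no vtable), at the cost of a `match` wherever the state matters; to get a `Dfa<Normalized>` back out, you match on the `Normalized` variant.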

SQL co-creator embraces NoSQL

Sometimes your own invention just isn't enough anymore

I agree that SQL is not well suited for everything. But I fear that we may return to how it was before, with lots of different query languages, one for each database system. That being said, SQL is not completely standardized; there are, for instance, differences between Oracle SQL and Transact-SQL (for MS SQL Server), although the basic parts of the languages are the same.

pibbuR who, by extension and mainly looking at the name, has high hopes for SQL++. And who, despite the name, has used MongoDB. And who thinks JSON is ugly.

How Many Languages A Developer Should Know?

Navigating the Multilingual Landscape of Software Development

sotergreco.com


"A developer should know all the languages"

Good luck with that. I know (or have learned) 12 of them.

But I agree that Python should be your first language.

pibbuR who still doesn't like Python, mainly for irrational reasons which aren't well documented. He would of course consider Python++ (currently unsupported).

From a discussion on Codeproject (https://www.codeproject.com/Lounge.aspx?msg=6000758#xx6000758xx):

"I am currently listening to J.S. Bach - Brandenburg Concerto No. 2 in F major BWV 1047 on repeat while I program. Hearing those violins just sawing away, with that fun, occasional little four-note sequence (bah BAH bah bah..) makes my fingers just fly over the keyboard while I am programming!

According to an article by the NIH: Cognitive Crescendo: How Music Shapes the Brain’s Structure and Function - PMC, music stimulates the mind while working on intellectual tasks.

Anyone else have any favorite music they listen to make them write code at hyper speed?"

pibbuR who probably would listen to Motorpsycho, Magma, Dream Theater or Ummagumma (the first record). Never jazz.

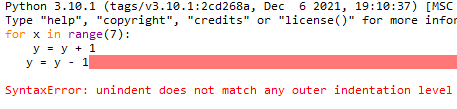

I think I'm going to disagree about Python as a first language. Yes, Python is very powerful, not so much because of the language but because of the available libraries (this was also true of BASIC many years ago); and yes, Python is more structured than BASIC, so one could argue it is a more difficult but more structured first language. However, Python also has a lot of "oops" moments that simply shouldn't exist in a language intended to teach people how to program. My simple example:

Code:

for x in range(7):
    y = y + 1
    y = y - 1

is different than

for x in range(7):
    y = y + 1
  y = y - 1

----

For a simple program such an error is easy enough to catch, but in a much larger body of code... well. Of course you might have syntax checkers spit out a warning on such syntax, but still, I hope you see my point.

----

Fortran has a similar problem: the language restricts how long a line can be, and I once had to help a lady who had spent two days debugging a watershed program in Fortran because she exceeded the line length by one character, causing a new variable to be introduced (in fixed-form Fortran, anything past column 72 is silently ignored, so a truncated name becomes a new, implicitly declared variable).

-

So I find both languages unsuitable as teaching aids, even though both have their place in specific applications. I won't even get into the monstrosities that are COBOL and APL (APL was also a very powerful language in its time, if you could understand the code). I guess, in a way, my favorite language at the time, though not all that useful for my systems coding, was a specific dialect of LISP; naturally, after my time with LISP, the developers noticed all these nifty structures in other languages and just had to add them to LISP (was that Common Lisp?), destroying the language.


I do indeed see your point. In fact, the use of indentation to define blocks is one of the things I don't like about Python. I fail to see what's so difficult about begin..end.

But Python is consistently ranked at the top of the most popular programming languages, and therefore (I assume) the one most sought after when hiring programmers.

But what language would you recommend?

About LISP: I learned the Scheme dialect at University back then. There is of course also LITHP.

(((((pibbuR)))))


For me, this simple example would be in favour of Python, which has always been designed for clarity. Properly indented code is important to avoid misinterpreting it. Be sloppy with the indentation as in the 2nd example and Python will yell at you (it's an error, so there's no risk of misinterpretation here).

No, the foremost issue with Python is that it's dynamically typed. They tried to work around it as best they could by introducing type hints, but it's a band-aid on a rotting leg. And it's slow, but that's only a problem when you're crunching a lot of data.

It also has a very big library, so it's not easy to share an application with someone. All you can hope for is that the other person has a compatible version installed.

Not easy to do a recommendation, as it depends on what you want to do and how far you want to go as a learner. I'd say Python or Kotlin.

Python is great as a 1st language because it's interactive, relatively intuitive, clear, and gives good habits. The fact that variables are dynamically typed isn't a big problem at the beginning. It's well-known and has great environments to play with (see NumPy, Jupyter, Matplotlib, ...), and great free IDEs like PyCharm. It's a great scratchpad language to do a little something quickly, like processing some data to change the format or extract some information, or even prototyping.

Kotlin is probably the best option in many cases. It's also quite clear and based on a garbage collector (no pointers to complicate and frustrate the learner). It's very powerful, so you can keep using it to develop more complex programs. It has very good and easy support for asynchronous code. It compiles to Java bytecode, is compatible with Java libraries, and can be used to write Android apps, for example. It can also generate JavaScript or native code, though with restrictions. Great free IDEs like IntelliJ.

The potential downsides are for more advanced users: the Java runtime (the Java flaws have been reduced to a minimum) and the garbage collector (easy to abuse, which leads to bad habits). Both may considerably slow down applications and make them inadequate for real-time apps like games.

C# is great too, but I don't know its current status on Linux or its portability; it's supposed to be better than before, though. It's a little annoying to type because they've been overdoing the camel casing (unless you're German and used to capitalizing every noun). Great free IDEs like Visual Studio.

Java ... I don't know. The thing is, it's still widely used, but it hasn't aged well and it's not in good hands. But to learn OO, it's not the worst idea.

Haskell could be interesting to learn the functional approach. It has no real IDE or GUI to play with it, unfortunately, and it feels a little dusty. I would hesitate to advise that as 1st language, but learning the basics should add a nice complement to the programmer's toolbox. LISP, Clojure, or others are probably good alternatives, but the readability isn't great.

Rust gives a nice complement for everything related to code safety and memory management - should give good habits for other pointer-based languages like C/C++. Definitely not advised as 1st language and not fully OO.

C++ remains a good language to learn, too, but it's quite complex for a 1st language (and the makefiles don't help).

The others I can think of are either not mature enough or too old.


I started with one year of Pascal in high school, then I moved to a parallel class that did C and C++. It was manageable, and honestly the exotic elements made it a lot more interesting than Pascal.

Little did I know how little of C++ we were actually exposed to.

String types? Conceptually you'd think they should not be too complex. In C++?

To be fair, that's a whole lot more than just the language. It's every framework and library under the sun defining its own string type. But the joke wouldn't be as fun.


Here is where we disagree. I would not object if the code bombed, i.e. if the interpreter said "hey, that line is neither in the for loop nor in the main 'loop'". In that respect I would agree with your thoughts, as it is sloppy. However, since the code is treated as correct but behaves differently, this causes correctness issues in a large system. You shouldn't have a correctness issue due to this sort of error (IMHO).

It would be easy enough for the language to treat it as a fatal error, since it should require the indentation to be consistent at all levels IF the indentation has semantic meaning (which it does). I see that as a sloppy definition in the language that makes it a bad candidate for large systems.

At least that is my reasoning. Obviously you feel differently, and I guess that is fine even if I disagree.

That's what happens here: the interpreter stops and says the indentation is inconsistent. I don't see what more one could wish for. The code is not treated as correct.


Besides, it's really the same as mixing up opening and closing brackets in languages like C or Rust. It happens all the time, but thankfully the compiler can usually detect it.

Hey, no fair; they changed the interpreter! The last time I used Python it didn't flag the issue but ignored it.


I'm happy. My partial parser generator seems to work just fine, and the partial lexer generator is working too. They can't read their specifications from a file yet, but that's the fun part: now I can use each one to bootstrap the other and iterate. A little manual work will let me create a file parser for the lexer generator, which will in turn help me create a file parser for the parser generator (I know, I know... it's intricate).

The things we have to do, sometimes.

My companion in that quest, this 1000-ish-page brick:

(no, it's not following the D&D ruleset)
