Vaelith

Keeper of the Watch

There are two possibilities.

1. They did put the poll there, got a backlash, and then blamed the AI as a cop-out, the way kids blame the dog for eating their homework.

2. The AI did it, and then, who cares? It's an AI; there was no malice or intent behind it, just an algorithm that didn't behave as intended. It was a poll about someone's death, but it could just as well have been one about the probability of rain that day. People need to grow a thicker skin.

People like to say that the newer generations are made of glass because they get offended by everything, but it seems to me that it's really everyone who is too ready to feel offended by anything these days, even when there's clearly no ill intent behind it; perhaps to get a taste of that sweet victim syndrome on their lips.

That's the risk when you authorize another party to publish your articles elsewhere, with whatever spin they may give them.

It looks like there are two separate concerns: 1) this practice and the general difficulties news publications face (The Guardian does seem to have been struggling for a while), and 2) the use of AI to generate articles, which should be done under the supervision of the author and editor, though who knows how it was done in this case.

Of course, it's tasteless, but it was also made by an AI, and at that point there's no reason to be offended. Past the initial confusion, it should be easy to make that separation, much as (though not in exactly the same way) you don't feel offended when a comedian makes jokes about a problem you have, because it's comedy.

I speak for myself, of course; people can feel offended about whatever they want, especially those who like to pretend to a higher morality, or those who could win monetary compensation from the Guardian in court. Humans will always be humans.

Another way to see it is as part of the learning curve of using AI to generate content. The ChatGPT developers are making efforts to avoid answering some questions and touching delicate subjects, but this example shows there are other situations to avoid. And showing a poll question like that next to an article about a person's death is quite tasteless (intentional or not, and regardless of who is responsible).

It reminds me of an episode of Mad Men where an ad was placed at the wrong time in a TV programme, creating an unfortunate association that backfired on poor Kinsey, because he should have checked it. It happens sometimes with ads in newspapers, or when two adjacent stories have an unfortunate link. But those are accidents; if ads are placed by word association, that can give the wrong results too (like some shown here).

So maybe AI can actually avoid those unfortunate associations better than methods used in the past.

More ChatGPT news:

bulkninja.notion.site

www.theverge.com

pibbuR whose intellectual work is completely free but inaccessible.

Email Obfuscation Rendered (almost) Ineffective Against ChatGPT

Over the years, techniques for email obfuscation, like modifying characters (for instance, replacing '@' with '(at)'), have been utilized to prevent automated programs from easily collecting email addresses. While these methods were effective against basic web-scraping techniques, they have...
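A scraper that only looks for a literal '@' misses addresses written as "name (at) example (dot) com", but one that anticipates the specific substitution can simply reverse it. The sketch below shows that for a few bracketed "(at)"/"(dot)" variants. The patterns and the helper names `deobfuscate` and `find_emails` are hypothetical, chosen for illustration; this is not how ChatGPT works, only a hand-written baseline. The linked piece's claim is that a language model makes such obfuscation (almost) ineffective without anyone writing rules like these.

```python
import re

# Hypothetical patterns: only bracketed "at"/"dot" tokens such as (at), [at], {dot}.
# Spaced forms like "name at example dot com" are deliberately NOT handled,
# to avoid mangling ordinary prose ("meet me at noon").
_AT = re.compile(r"\s*[\(\[\{]\s*at\s*[\)\]\}]\s*", re.IGNORECASE)
_DOT = re.compile(r"\s*[\(\[\{]\s*dot\s*[\)\]\}]\s*", re.IGNORECASE)

# Simplified address pattern (not a full RFC 5322 parser).
_EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def deobfuscate(text: str) -> str:
    """Turn bracketed 'at'/'dot' tokens back into '@' and '.'."""
    return _DOT.sub(".", _AT.sub("@", text))


def find_emails(text: str) -> list[str]:
    """Undo the simple obfuscation, then collect anything that looks like an address."""
    return _EMAIL.findall(deobfuscate(text))


if __name__ == "__main__":
    sample = "Contact: jane.doe (at) example (dot) com or bob[at]mail[dot]org"
    print(find_emails(sample))
```

Each new obfuscation style needs another hand-written rule here, which is exactly the kind of maintenance a language model sidesteps.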

AI companies have all kinds of arguments against paying for copyrighted content

Most argue training with copyrighted data is fair use.

Heh. Why were posts 'deleted'?

Anyway, here's a detector.

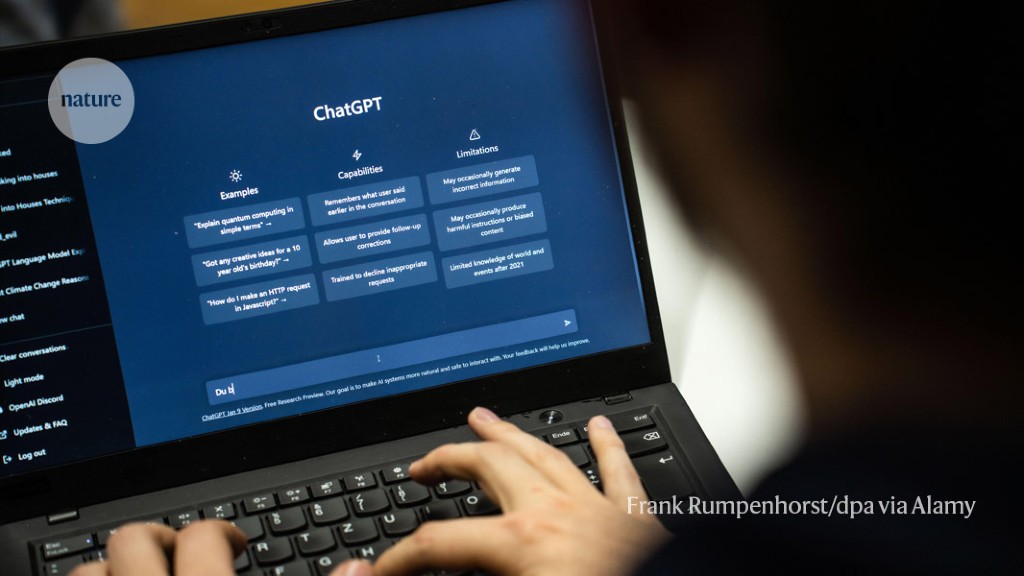

www.nature.com

‘ChatGPT detector’ catches AI-generated papers with unprecedented accuracy

Tool based on machine learning uses features of writing style to distinguish between human and AI authors.

For some reason I regretted posting them.

Here you go: same thing as when we used to use Photoshop instead.

www.cbsnews.com

New Jersey high school students accused of making AI-generated pornographic images of classmates

In an email, the school principal encouraged parents of victims to contact the Westfield Police.

It's a relief to see you say that. I was sure it was Skynet trying to cover things up.

They must show examples or it didn't happen.

I wouldn't be too sure. After all, AI can impersonate anyone these days.

pibbuR who claims that he was the one who wrote this.

Since I never visit the NSFP forum (aka P&R), showing examples won't work for me.

Let's hope this guy is wrong.

www.popularmechanics.com

A Scientist Says the Singularity Will Happen by 2031

Maybe even sooner. Are you ready?

I don't know... we've made such a mess so far. Maybe it's time to leave it all to ChatGPT.

What could possibly go worse?

venturebeat.com

Why does AI have to be nice? Researchers propose ‘Antagonistic AI’

These systems that are purposefully combative, critical, rude and even interrupt users mid-thought, challenging current “vanilla” LLMs.

pibbuR who can be not-nice without the use of AI, and also doesn't use AI to hide it.

It's just a tool; it's supposed to be helpful. If I want antagonism, I just have to look at Windows.

Let's hope they won't be wasting funds on fancy stuff like that when there are so many more important topics to tackle.

You could also visit the P&R forum (I assume; I never go there).

Well, it doesn't have to be insulting. But there are a couple of things that (I think) could be of concern in the way it works now. For instance, if it is overly gentle, it may, by not arguing back, end up confirming conspiracy theories. Say you asked it to tell you why the government is hiding information about alien visitors (I haven't tested that), or why Covid-19 is a hoax (I haven't tested that either).

We know what this AI is, and probably won't be fooled. But I suspect a not insignificant group from the general public will.

pibbuR who if being fooled won't admit it.

PS.

DS

PPS. As I said above, I haven't tested those examples. But I did once ask it "Why is 3 not a prime number?", and it was not fooled. Although, as far as I remember, the logic it presented was a bit weird. DS.
